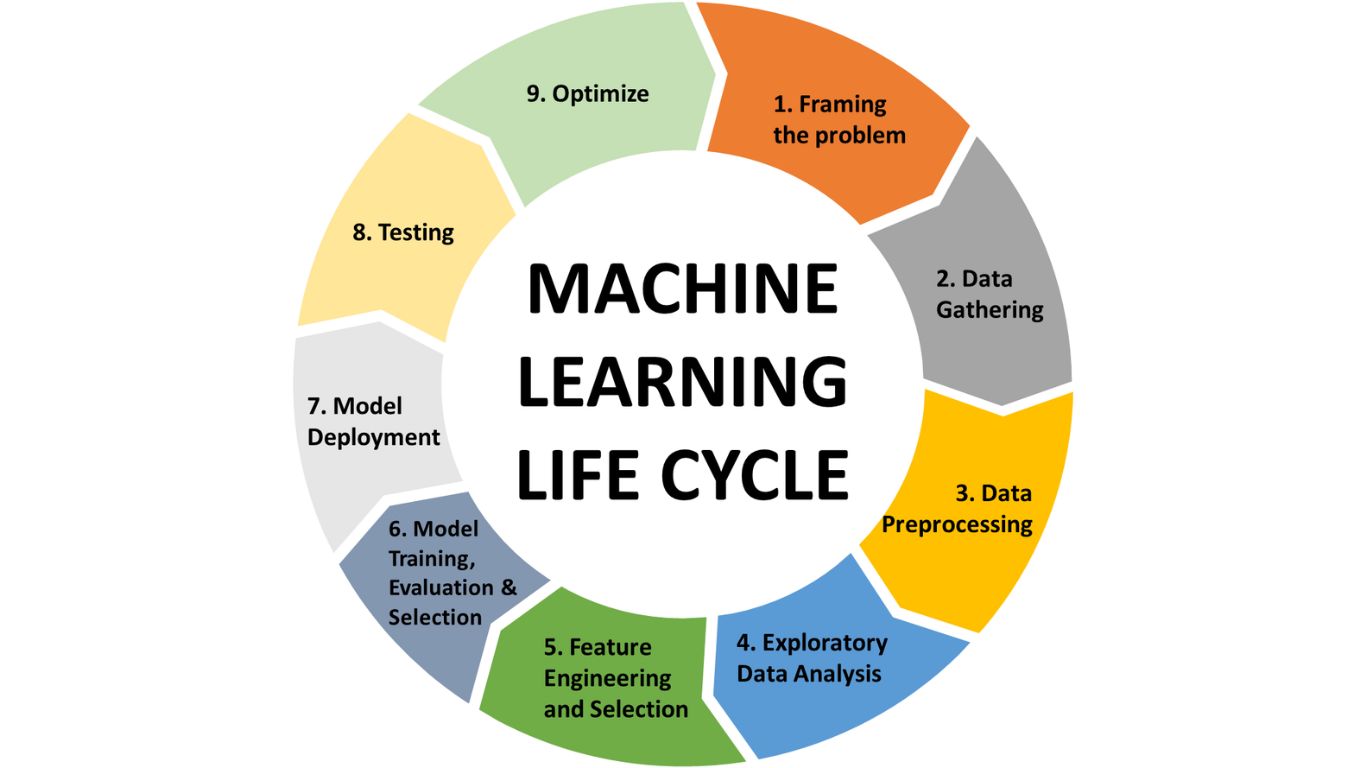

Machine Learning (ML) isn’t just about building models; it’s a systematic journey involving distinct phases, each integral to the success of the final outcome. Let’s embark on a comprehensive exploration of the nine major steps constituting the Machine Learning life cycle:

Framing the Problem

At the inception, defining the problem is paramount. It involves understanding the business objectives, determining the scope of the project, and framing it as a machine learning problem, whether it’s classification, regression, clustering, or another task.

- Understanding Business Objectives – At the onset, it’s crucial to align the ML project with the overarching business objectives. This involves comprehensive discussions with stakeholders to ascertain the problem’s context, its relevance to business goals, and the expected outcomes.

- Defining the Scope – Defining the boundaries of the problem is essential. It involves identifying constraints, limitations, and the specific area of focus within the larger context. Clear delineation prevents scope creep and ensures a targeted approach.

- Formulating as a Machine Learning Problem – Once the problem is understood and the scope is defined, it’s essential to formulate it as a machine learning problem. This step involves categorizing the problem type—whether it’s a supervised, unsupervised, or reinforcement learning task. It includes determining if the problem is a classification, regression, clustering, anomaly detection, or other types of ML problems.

- Data Requirements and Feasibility – Assessing the availability and feasibility of data is crucial. This involves identifying the types of data required, their sources, potential challenges in acquiring them, and ensuring the data’s sufficiency to address the problem adequately.

- Defining Evaluation Metrics – Establishing clear evaluation metrics is vital to gauge the success of the ML solution. Metrics could range from accuracy, precision, recall, F1-score for classification tasks, to RMSE, MAE for regression problems. Defining these metrics helps in measuring the model’s performance against set benchmarks.

- Ethical and Regulatory Considerations – Consideration of ethical implications and compliance with regulations is imperative. Identifying and addressing potential biases, privacy concerns, and legal requirements at this stage ensures responsible and lawful use of data and models.

- Documentation and Communication – Finally, documenting the problem statement, scope, objectives, and considerations is essential. This documentation serves as a blueprint for the entire ML project and aids in effective communication among team members and stakeholders.

“Framing the Problem” is akin to laying the foundation for a sturdy structure. A thorough and well-defined framing sets the trajectory for the subsequent steps in the ML life cycle, guiding the development of effective ML solutions that align with business objectives and address real-world problems comprehensively.

Data Gathering

The foundation of any ML model rests on the quality and quantity of data. Gathering diverse, relevant, and comprehensive datasets from various sources is essential. This step demands meticulousness in acquiring structured, unstructured, or semi-structured data depending on the problem at hand.

- Identifying Data Sources – The process begins with identifying potential sources of data. These sources could be internal, such as databases, logs, or company records, or external, including public repositories, APIs, or third-party vendors. Understanding the diversity and reliability of these sources is crucial.

- Data Collection Strategies – Depending on the nature of the problem, different strategies might be employed to gather data. It could involve web scraping for online data, using sensors for IoT-related data, surveys, interviews, or collaborating with partners to obtain specific datasets.

- Ensuring Data Quality and Quantity – Acquiring the right quantity and quality of data is paramount. Quality data is free from errors, duplicates, and inconsistencies, while quantity ensures that the dataset is sufficiently large to capture diverse patterns and variations.

- Data Permissions and Compliance – Respecting data permissions and ensuring compliance with data regulations is crucial. Obtaining necessary permissions, adhering to data protection laws, and maintaining data confidentiality are essential ethical considerations during data collection.

- Data Integration and Cleaning – Integrating data from various sources might require harmonization and cleaning. This involves standardizing formats, dealing with missing values, outliers, and ensuring uniformity to create a coherent dataset.

- Metadata Documentation – Documenting metadata – information about the data such as its source, format, and characteristics – is crucial. This documentation aids in understanding the dataset, ensuring its traceability and facilitating future use.

- Exploratory Data Collection – Conducting a preliminary data analysis or exploratory data collection helps in understanding the nature of the data, identifying patterns, trends, and potential biases. It guides subsequent steps in the ML process.

- Iterative Process – Data gathering is often an iterative process. As the ML project progresses, new insights might demand the collection of additional data or modifications to existing datasets to improve model performance.

- Version Control and Tracking – Maintaining version control of datasets, tracking changes, and establishing a robust system to trace data lineage is crucial. This ensures reproducibility and facilitates troubleshooting if issues arise.

The efficacy of an ML model significantly relies on the quality, diversity, and relevance of the data used. Therefore, meticulous attention to data gathering ensures a strong foundation for subsequent stages in the ML life cycle.

Data Preprocessing

Data seldom comes in a ready-to-use format. This step involves cleaning the data by handling missing values, removing outliers, and transforming data types. Data preprocessing ensures the dataset is in a consistent and suitable format for analysis.

- Handling Missing Values – One of the initial tasks involves addressing missing data points. This might involve imputation, where missing values are replaced with estimated ones based on statistical methods or using algorithms that predict missing values.

- Outlier Detection and Treatment – Identifying outliers that deviate significantly from the rest of the data is essential. Outliers can skew results, hence strategies like removing outliers or transforming them to reduce their impact are employed.

- Data Normalization or Scaling – Normalizing or scaling data ensures that features have similar scales, preventing some features from dominating the model due to larger magnitudes. Techniques like min-max scaling or standardization are commonly used.

- Encoding Categorical Variables – Many ML algorithms cannot work directly with categorical variables, so encoding them into numerical format becomes necessary. Techniques like one-hot encoding or label encoding are applied based on the nature of the data.

- Handling Imbalanced Datasets – In scenarios where one class of data significantly outweighs another, balancing techniques such as oversampling, under sampling, or using algorithms like SMOTE (Synthetic Minority Over-sampling Technique) are used to address class imbalance.

- Feature Selection – Selecting relevant features is crucial for model efficiency. Techniques like correlation analysis, feature importance, or using algorithms that automatically select features based on their contribution to the model are employed.

- Dimensionality Reduction – Reducing the number of features while retaining essential information is beneficial, especially with high-dimensional data. Techniques like Principal Component Analysis (PCA) or t-Distributed Stochastic Neighbor Embedding (t-SNE) are utilized.

- Data Discretization – Discretizing continuous data into intervals or bins simplifies complex data, making it easier for certain types of models to learn patterns or reducing noise.

- Text Preprocessing (NLP) – For text data, preprocessing involves tasks like tokenization, removing stop words, stemming or lemmatization, and vectorization to convert text into numerical format for analysis.

- Pipeline Creation – Creating a data preprocessing pipeline streamlines these tasks, ensuring consistency and reproducibility across different datasets or iterations of the model.

- Validation and Iteration – Preprocessing is often an iterative process. As models are built and evaluated, revisiting data preprocessing steps based on model performance might be necessary for improvements.

- Documentation and Logging – Documenting all preprocessing steps and keeping track of changes made to the data is vital for reproducibility and transparency in the ML process.

Data preprocessing is fundamental in ensuring the quality, consistency, and suitability of data for training ML models. It lays the groundwork for accurate and efficient model development and validation.

Exploratory Data Analysis

Understanding the data’s characteristics and relationships is crucial. Exploratory Data Analysis (EDA) involves visualizing and summarizing data to gain insights into patterns, trends, and potential correlations. It helps in understanding the nature of the data and guiding subsequent steps.

- Data Summary and Descriptive Statistics – EDA commences with obtaining an overview of the dataset. Descriptive statistics like mean, median, standard deviation, and quartiles for numerical features, and frequency counts for categorical variables provide an initial understanding.

- Data Visualization – Visualizing data through histograms, box plots, scatter plots, heatmaps, and pair plots aids in understanding distributions, correlations, trends, and outliers within the data. Visualization makes complex patterns more interpretable and intuitive.

- Correlation Analysis – Analyzing correlations between variables helps in understanding relationships. Techniques like correlation matrices or heatmaps highlight the strength and direction of relationships, aiding in feature selection and model building.

- Handling Skewed Data – Identifying and addressing skewness in data distributions is crucial. Transformations like log transformations or scaling methods can normalize skewed data for better model performance.

- Feature Relationships – Exploring relationships between different features helps in uncovering insights. Techniques like scatter plots or joint plots reveal how variables interact, potentially indicating non-linear relationships.

- Data Segmentation and Grouping – Segmenting data based on categories or features allows for insights into group-specific patterns or behaviors. Grouping data using techniques like clustering helps in identifying inherent structures.

- Outlier Identification and Analysis – Spotting outliers that deviate significantly from the norm is essential. EDA techniques help in visualizing and understanding outliers’ impact on the data and deciding on appropriate treatment strategies.

- Temporal Analysis – For time-series data, analyzing trends, seasonality, or cyclical patterns over time aids in understanding patterns that evolve with time, guiding predictive modeling.

- Identifying Data Patterns and Anomalies – Exploring patterns or anomalies in the data beyond standard statistical measures involves employing techniques like anomaly detection algorithms or visual inspection to reveal hidden insights or irregularities.

- Validation and Iteration – EDA is an iterative process. As insights are derived and models are built, revisiting EDA steps based on model performance might be necessary for improvements or to explore new hypotheses.

- Documentation and Reporting – Documenting EDA findings, including visualizations, summaries, and key insights, is crucial for communicating insights to stakeholders and team members, ensuring transparency and guiding subsequent steps in the ML process.

EDA serves as a critical step in the ML life cycle, providing valuable insights into data characteristics, patterns, and relationships that drive informed decisions during model building and validation.

Feature Engineering and Selection

Features or attributes are the driving force behind model performance. Feature engineering involves creating new features or transforming existing ones to enhance model accuracy. Feature selection determines the most relevant features, optimizing model efficiency and reducing overfitting.

- Creation of New Features – Creating new features involves deriving additional meaningful attributes from existing ones. For instance, from a timestamp, extracting features like day of the week, month, or time of day can provide valuable temporal information.

- Transformation of Features – Transforming features aims to enhance their significance or make them more suitable for modeling. Techniques like logarithmic transformations, scaling, or normalization can improve feature distributions.

- Handling of Categorical Variables – Converting categorical variables into numerical form is crucial for model compatibility. Techniques like one-hot encoding, label encoding, or target encoding prepare categorical data for model consumption.

- Imputation of Missing Values in Features – Imputing missing values in features can be critical. Techniques like mean imputation, median imputation, or using predictive models to fill missing values help maintain dataset integrity.

- Dimensionality Reduction – Reducing the number of features without losing significant information is vital, especially with high-dimensional data. Techniques like Principal Component Analysis (PCA) or feature selection algorithms like Recursive Feature Elimination (RFE) aid in selecting the most informative features.

- Feature Importance Analysis – Assessing the importance of features in predicting the target variable guides feature selection. Techniques like Random Forest Feature Importance or Gradient Boosting Machines reveal the contribution of each feature to model performance.

- Cross-Validation for Feature Selection – Utilizing cross-validation techniques helps in robustly assessing feature performance across different subsets of data, ensuring selected features generalize well to unseen data.

- Domain Knowledge Integration – Incorporating domain expertise can guide feature selection. Understanding the problem domain and the relevance of certain features enhances the creation of meaningful and predictive attributes.

- Regularization Techniques – Using regularization methods like L1 (Lasso) or L2 (Ridge) regularization can help in feature selection by penalizing less relevant features or shrinking coefficients, preventing overfitting.

- Validation and Iteration – Feature engineering and selection are iterative processes. Continuously refining and iterating through these steps based on model performance can improve the model’s predictive power.

- Documentation and Reporting – Documenting the rationale behind feature selection, transformations applied, and their impact on model performance is essential for transparency and replicability of results.

Feature engineering and selection play a pivotal role in enhancing model accuracy, reducing overfitting, and improving computational efficiency by focusing on the most informative and relevant attributes within the dataset.

Model Training, Evaluation and Selection

Training involves feeding data into ML algorithms to build models. Evaluating models using various metrics helps in assessing performance. Model selection involves choosing the best-performing model among different algorithms or architectures.

- Training ML Models –Training ML models involves feeding prepared data into various algorithms or architectures, allowing them to learn patterns, relationships, and representations. This step aims to optimize model parameters for better predictions.

- Cross-Validation – Utilizing techniques like k-fold cross-validation helps in robustly assessing model performance. It involves dividing the dataset into subsets for training and validation, iteratively testing the model’s performance on different portions of the data.

- Hyperparameter Tuning – Optimizing hyperparameters—parameters that dictate the model’s behavior but are not learned during training—helps in enhancing model performance. Techniques like grid search or random search explore different hyperparameter combinations to find the best ones.

- Model Evaluation Metrics – Evaluating models using appropriate metrics is essential. Metrics vary based on the problem type—accuracy, precision, recall, F1-score for classification, or RMSE, MAE for regression problems—allowing quantification of model performance.

- Validation and Test Sets – Splitting data into training, validation, and test sets ensures unbiased evaluation. Training on one set, tuning on another (validation), and testing on a separate set helps assess how well the model generalizes to unseen data.

- Ensemble Methods – Combining multiple models using ensemble techniques like bagging, boosting, or stacking often results in improved performance. These methods leverage the strengths of diverse models to achieve better predictive power.

- Model Interpretability – Understanding how models make predictions is crucial. Techniques like feature importance, SHAP values, or partial dependence plots help interpret and explain the model’s decisions, especially in high-stakes domains.

- Model Selection Criteria – Selecting the best-performing model based on evaluation metrics, computational efficiency, scalability, and interpretability aligns with the project’s objectives and constraints.

- Overfitting and Underfitting Mitigation – Ensuring models do not overfit (fitting excessively to training data) or underfit (failing to capture patterns) is vital. Techniques like regularization, dropout, or adjusting model complexity address these issues.

- Documentation and Reporting – Documenting model training details, hyperparameters, evaluation results, and reasons behind model selection aids in transparency, reproducibility, and communication with stakeholders.

- Continuous Improvement – Iterating through model training, evaluation, and selection iteratively refines models based on new insights or changing data, ensuring continuous improvement in performance.

Model training, evaluation, and selection are iterative processes that demand careful consideration of various factors to choose the most effective model for the given problem, ensuring robust and accurate predictions.

Model Deployment

Once a model is trained and validated, deploying it into a production environment is crucial. It involves integrating the model into existing systems or platforms to make predictions on new data.

- Preparing Models for Deployment – Before deployment, models need to be prepared for production. This involves saving model parameters, preprocessing steps, and any necessary artifacts to ensure consistency between training and deployment.

- Scalability and Efficiency – Ensuring models can handle the expected workload and perform efficiently in production environments is crucial. Optimizing model inference time and memory usage for scalability is essential, especially in high-throughput systems.

- Integration with Infrastructure – Integrating ML models into existing systems or applications requires seamless compatibility. This involves working closely with software engineers or DevOps teams to ensure smooth integration, often through APIs or microservices.

- Model Monitoring and Maintenance – Continuous monitoring of deployed models is vital. Tracking model performance, drift detection (changes in data distribution over time), and retraining schedules are necessary to maintain model accuracy.

- Security and Privacy – Implementing robust security measures to protect models and data is paramount. Techniques like encryption, access controls, and secure API endpoints safeguard models against potential threats.

- A/B Testing and Experimentation – Conducting A/B tests or experimentation with different model versions or features in production allows for iterative improvements. It helps in comparing model performance and making informed decisions.

- Fail-Safe Mechanisms – Implementing fail-safe mechanisms or fallback strategies in case of model failure or unexpected behavior ensures system resilience and prevents disruptions in production environments.

- Version Control and Rollbacks – Maintaining version control of deployed models and having mechanisms for rolling back to previous versions if issues arise ensures reliability and minimizes downtime.

- Documentation and Communication – Thorough documentation of deployment processes, configurations, and any updates made to the deployed model aids in transparency and facilitates troubleshooting or future updates.

- Collaboration and Feedback – Maintaining open communication channels between data science, engineering, and business teams allows for feedback loops, enabling improvements and updates based on real-world usage and requirements.

- Compliance and Regulations – Adhering to industry-specific regulations and compliance standards, especially concerning data privacy and ethical considerations, is essential during model deployment.

Model deployment is the culmination of the ML life cycle, where the fruits of model development and validation are brought to practical use. It involves careful planning, rigorous testing, and ongoing maintenance to ensure the successful integration of ML models into operational systems.

Testing

Testing the deployed model is essential to ensure its performance matches expectations. This step involves feeding new or unseen data to the model and evaluating its predictions against actual outcomes.

- Validation Set Testing – Using a separate validation set during model training helps assess its performance on data it hasn’t seen before. It validates how well the model generalizes to new, unseen data.

- Test Set Evaluation – The test set, kept entirely separate from both training and validation data, serves as the final checkpoint. Evaluating the model’s performance on this set gauges its real-world predictive capabilities.

- Performance Metrics – Metrics like accuracy, precision, recall, F1-score for classification, or RMSE, MAE for regression models quantify model performance. Choosing appropriate metrics aligns with the problem’s objectives.

- Confusion Matrices and ROC Curves – Confusion matrices and Receiver Operating Characteristic (ROC) curves provide detailed insights into model behavior. They showcase true positive, true negative, false positive, and false negative rates, aiding in model assessment.

- Cross-Validation Techniques – K-fold cross-validation helps ensure robustness by partitioning data into multiple subsets. It evaluates models on different splits, reducing the risk of overfitting to a specific set of data.

- Benchmarking and Baseline Models – Comparing model performance against baseline models or existing benchmarks offers valuable insights. It serves as a reference point for improvements and validates the efficacy of the developed model.

- Model Stability and Sensitivity Analysis – Testing model stability against small changes in data or parameters verifies its consistency and robustness. Sensitivity analysis assesses how variations in input impact model predictions.

- Error Analysis – Understanding model errors and misclassifications helps identify patterns or biases. Analyzing these errors informs improvements in feature engineering, data quality, or model adjustments.

- Threshold Tuning – Adjusting decision thresholds in classification models balances precision and recall. Optimizing these thresholds aligns model predictions with specific business requirements or preferences.

- Bias and Fairness Testing – Testing models for bias and fairness ensures equitable predictions across different demographic groups. This helps identify and rectify biases that might lead to unfair outcomes.

- Documentation and Reporting – Documenting testing procedures, results, and any observed anomalies or limitations ensures transparency and aids in communicating findings to stakeholders.

Comprehensive testing is integral to ensure ML models function effectively, accurately, and ethically in real-world applications. It validates model reliability, assists in identifying improvements, and ensures alignment with business objectives and ethical considerations.

Optimization

Continuous improvement is key. Optimization involves fine-tuning models, updating algorithms, and reiterating through the life cycle to enhance model performance as new data becomes available.

- Hyperparameter Tuning – Adjusting hyperparameters—parameters external to the model that affect its behavior—enhances model performance. Techniques like grid search or random search explore various combinations to find the best settings.

- Algorithm Selection and Optimization – Choosing the most suitable algorithm for the problem domain and optimizing its parameters can significantly impact model performance. This involves experimenting with different algorithms and configurations.

- Feature Selection and Engineering Refinement – Refining feature engineering techniques or selecting more informative features improves model accuracy. Iterative exploration of feature sets helps identify those most relevant to the problem.

- Ensemble Methods Refinement – Fine-tuning ensemble methods like bagging, boosting, or stacking by adjusting individual model weights or parameters often leads to improved predictive power.

- Model Architecture Optimization – For deep learning models, adjusting architecture layers, nodes, or activation functions can enhance performance. Techniques like transfer learning or neural architecture search (NAS) optimize model design.

- Regularization and Dropout Tuning – Applying regularization techniques or adjusting dropout rates in neural networks prevents overfitting and improves model generalization to unseen data.

- Optimizing Training and Inference Processes – Improving the efficiency of training and inference by optimizing batch sizes, learning rates, or using hardware accelerators like GPUs/TPUs reduces computational time and costs.

- AutoML and Automated Optimization – Leveraging AutoML tools or automated optimization platforms streamlines the process by automatically exploring multiple configurations and selecting optimal models.

- Continuous Monitoring and Retraining – Regularly monitoring model performance and retraining models with new data or updated strategies maintains model accuracy and relevance over time.

- Scaling for Large Datasets – Optimizing algorithms and infrastructures to handle large volumes of data efficiently ensures models can scale without compromising performance.

- Ethical Considerations in Optimization – Ensuring fairness, transparency, and mitigating biases during optimization aligns with ethical principles in deploying ML models.

- Documentation and Reporting – Documenting optimization processes, parameters tuned, and resulting improvements ensures transparency and aids in replication or future optimizations.

Optimization in ML involves a continuous cycle of refining models, algorithms, and processes to achieve superior performance, scalability, and efficiency while aligning with ethical considerations. It’s a vital step toward deploying effective and impactful ML solutions.

Conclusion

Each step in the ML life cycle is interdependent, with iterations and feedback loops often present between them, refining and improving the model’s efficacy. Understanding and executing these steps meticulously can lead to robust and effective machine learning solutions. As the realm of ML continues to evolve, mastering this life cycle remains pivotal, ensuring the seamless integration of machine learning solutions into diverse domains.