Reinforcement learning is a powerful approach to machine learning in which agents learn by interacting with an environment. It has attracted significant attention because it can tackle problems where good behavior is hard to specify directly, from playing Go at a superhuman level to controlling robots. In this article, we explore the fundamentals of reinforcement learning: its key components, algorithms, applications, challenges, and future directions.

Introduction to Reinforcement Learning

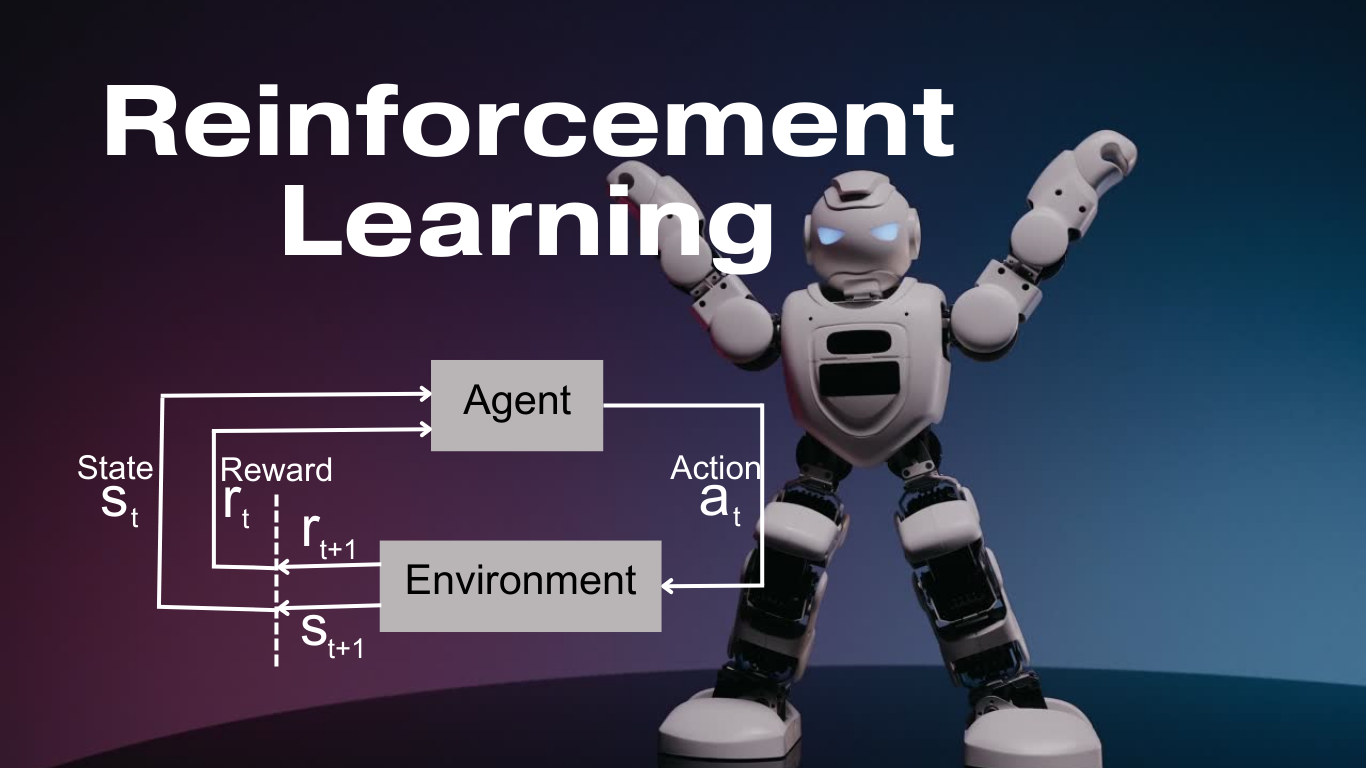

Reinforcement learning is a branch of machine learning that focuses on how agents learn from their interactions with an environment to maximize cumulative reward. Unlike supervised learning, where a model is trained on labeled examples of correct outputs, or unsupervised learning, where a model discovers patterns in unlabeled data, reinforcement learning involves learning through trial and error: the agent is never told which action is correct, only how much reward its actions earned.

The goal of reinforcement learning is for an agent to learn an optimal policy (a strategy that determines the agent’s actions based on its observations) so as to maximize the cumulative reward it receives from the environment. Through repeated interactions, the agent learns to take actions that lead to favorable outcomes and to avoid actions that lead to unfavorable ones.

Key Concepts in Reinforcement Learning

Agents and Environments

In reinforcement learning, an agent interacts with an environment. The agent receives observations from the environment, takes actions, and receives rewards in return. The environment can be anything from a simulated world in a computer program to a physical robot navigating the real world.
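The observe, act, reward cycle can be sketched in a few lines of Python. The environment below, `LineWorld`, is a hypothetical toy (five positions on a line, with a reward of +1 for reaching the right end), and its `reset`/`step` interface is a simplified assumption that only loosely resembles the pattern used by libraries such as Gymnasium; it is not that library's actual API. The agent here simply acts at random.

```python
import random

class LineWorld:
    """Hypothetical toy environment: positions 0..length-1 on a line.
    The episode ends with reward +1 when the agent reaches the right end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        # Start each episode at the left end and return the first observation.
        self.position = 0
        return self.position

    def step(self, action):
        # Action 0 moves left, action 1 moves right; the ends act as walls.
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def run_episode(env, choose_action, max_steps=100):
    """One episode of the loop: observe, choose an action, receive a reward."""
    observation = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(observation)
        observation, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


random.seed(0)
print(run_episode(LineWorld(), lambda obs: random.choice([0, 1])))
```

A learning agent would replace the random `choose_action` with a policy that improves from the rewards it collects.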

Rewards

Rewards are a crucial component of reinforcement learning. After each action, the environment returns a scalar reward that measures how desirable the outcome was. Positive rewards reinforce actions that lead to favorable outcomes, while negative rewards discourage undesired behavior. The agent’s goal is to maximize the cumulative reward over time, not just the reward from any single step.

Policies

Policies define the agent’s behavior. A policy maps states to actions, determining what action the agent should take in a given state. Policies can be deterministic, where each state is associated with a single action, or stochastic, where the policy assigns a probability to each action in a state and the agent samples its action from that distribution.
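The difference between the two kinds of policy can be sketched with plain dictionaries. The states (0, 1, 2), the action names, and the probabilities below are hypothetical: a deterministic policy is a direct lookup, while a stochastic policy stores a distribution over actions for each state and samples from it.

```python
import random

ACTIONS = ["left", "right"]

# Deterministic policy: each state maps to exactly one action.
deterministic_policy = {0: "right", 1: "right", 2: "left"}

# Stochastic policy: each state maps to a probability for every action.
stochastic_policy = {
    0: {"left": 0.1, "right": 0.9},
    1: {"left": 0.5, "right": 0.5},
    2: {"left": 0.8, "right": 0.2},
}

def act_deterministic(state):
    return deterministic_policy[state]

def act_stochastic(state, rng=random):
    probs = stochastic_policy[state]
    # Sample one action, weighting each by its probability under the policy.
    return rng.choices(ACTIONS, weights=[probs[a] for a in ACTIONS], k=1)[0]

rng = random.Random(42)
print(act_deterministic(0))  # always "right"
print([act_stochastic(1, rng) for _ in range(5)])
```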

Value Functions

Value functions estimate the expected cumulative reward (the return) an agent can achieve from a given state or state-action pair while following a particular policy. They play a crucial role in reinforcement learning algorithms by guiding the agent’s decision-making process. The two main types of value functions are the state value function, V(s), and the action value function, Q(s, a).
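In common textbook notation, these definitions can be written as follows, where G_t is the return from time t, γ is the discount factor (covered under Discount Factors below), and π is the policy being followed. The last line, which relates the two functions for a stochastic policy, is the standard identity rather than anything specific to this article.

```latex
G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}

V^{\pi}(s) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s \right]

Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[ G_t \mid S_t = s, A_t = a \right]

V^{\pi}(s) = \sum_{a} \pi(a \mid s) \, Q^{\pi}(s, a)
```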

Markov Decision Processes (MDPs)

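Markov Decision Processes (MDPs) provide a mathematical framework for modeling reinforcement learning problems. An MDP is defined by a state space, an action space, a transition model, a reward function, and a discount factor, each described below. The "Markov" property means that the next state and reward depend only on the current state and action, not on the earlier history.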

State Spaces

The state space represents the set of possible states the agent can be in. It captures all the relevant information the agent needs to make decisions. States can be discrete, where each state is distinct and separate, or continuous, where states exist on a continuous spectrum.

Action Spaces

The action space defines the set of possible actions the agent can take in a given state. Actions can also be discrete or continuous, depending on the problem at hand.

Transition Models

Transition models describe the dynamics of the environment. They give the probability of moving from one state to another when the agent takes a particular action, usually written P(s' | s, a): the probability of reaching next state s' after taking action a in state s.

Reward Functions

The reward function specifies the reward an agent receives for taking a particular action in a particular state, often written R(s, a); some formulations also make it depend on the resulting next state, R(s, a, s'). By assigning higher rewards to desirable outcomes, it encodes what the agent is meant to achieve.
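To make the transition model and reward function concrete, here is a hypothetical two-state MDP (a machine that is either "cool" or "hot" and can be run "slow" or "fast") encoded as Python dictionaries. `P[s][a]` lists (probability, next state) pairs, i.e. P(s' | s, a), and `R[s][a]` gives the reward for taking action a in state s. All names and numbers are illustrative assumptions.

```python
import random

# Hypothetical transition model: P[state][action] -> list of (probability, next_state).
P = {
    "cool": {"slow": [(1.0, "cool")], "fast": [(0.5, "cool"), (0.5, "hot")]},
    "hot":  {"slow": [(0.5, "cool"), (0.5, "hot")], "fast": [(1.0, "hot")]},
}

# Hypothetical reward function: R[state][action] -> immediate reward.
R = {
    "cool": {"slow": 1.0, "fast": 2.0},
    "hot":  {"slow": 1.0, "fast": -10.0},
}

def check_transition_model(P):
    """Each P[s][a] must be a probability distribution over next states."""
    for s, actions in P.items():
        for a, outcomes in actions.items():
            total = sum(p for p, _ in outcomes)
            assert abs(total - 1.0) < 1e-9, (s, a, total)

def sample_step(state, action, rng=random):
    """Sample a next state from P and look up the reward from R."""
    outcomes = P[state][action]
    probs = [p for p, _ in outcomes]
    next_states = [s for _, s in outcomes]
    next_state = rng.choices(next_states, weights=probs, k=1)[0]
    return next_state, R[state][action]

check_transition_model(P)
print(sample_step("cool", "fast", random.Random(0)))
```

Running "fast" earns more immediately but risks moving into the "hot" state, where running fast is heavily penalized; this is the kind of trade-off the transition model and reward function together capture.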

Discount Factors

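Discount factors determine the importance of future rewards compared to immediate rewards. A discount factor, denoted by the symbol γ (gamma), is a value between 0 and 1, and a reward received k steps in the future is weighted by γ^k. A higher discount factor places more emphasis on long-term rewards, while a lower discount factor prioritizes immediate rewards. With γ < 1 and bounded rewards, the cumulative reward stays finite even over an infinite horizon.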
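The effect of γ can be checked with a small function that computes the discounted return of a finite reward sequence, where `rewards[0]` is the reward received on the first step. The reward sequence below is a made-up example in which the only reward arrives at the last step.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * rewards[k] over a finite reward sequence."""
    g = 0.0
    # Work backwards using the recursion G = r + gamma * G_next.
    for r in reversed(rewards):
        g = r + gamma * g
    return g

rewards = [0.0, 0.0, 0.0, 10.0]  # hypothetical: a single delayed reward
for gamma in (0.5, 0.9, 0.99):
    print(gamma, discounted_return(rewards, gamma))
```

With γ = 0.5 the delayed reward of 10 is worth only 1.25 from the starting step, while with γ = 0.99 almost all of it is retained, which is why a higher γ makes an agent more willing to pursue long-term payoffs.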

Q-Learning: An Algorithmic Approach

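Q-learning is a popular model-free algorithm for estimating action values and learning optimal policies. It is based on the Q-value, the expected cumulative reward an agent can achieve by taking a particular action in a given state and acting optimally afterward. Because Q-learning learns these values directly from experience, it does not need a model of the environment’s transition probabilities; once the Q-values are learned, the agent acts by choosing the action with the highest Q-value in each state.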

Exploration and Exploitation

The exploration-exploitation trade-off is a fundamental challenge in reinforcement learning. Agents must balance the exploration of new states and actions to gather more information with the exploitation of their current knowledge to maximize rewards. Strategies such as the epsilon-greedy approach address this trade-off.

The Q-Value Iteration Process

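Q-learning estimates the optimal Q-value for each state-action pair iteratively. After each transition, it moves the current Q-value toward a target derived from the Bellman optimality equation, which relates the optimal Q-value of a state-action pair to the immediate reward plus the discounted Q-value of the best action in the next state. The size of each step is controlled by a learning rate, usually written α (alpha).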
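In the usual notation, the Bellman optimality equation for Q and the Q-learning update that approximates it from a single sampled transition can be written as below. Here α is a learning rate in (0, 1], γ is the discount factor, and S_{t+1} is the observed next state; by convention the max term is taken as zero when S_{t+1} is terminal. This is a sketch of the standard form, not a derivation.

```latex
Q^{*}(s, a) = \mathbb{E}\left[ R_{t+1} + \gamma \max_{a'} Q^{*}(S_{t+1}, a') \;\middle|\; S_t = s, A_t = a \right]

Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma \max_{a'} Q(S_{t+1}, a') - Q(S_t, A_t) \right]
```

The bracketed term in the update is the temporal-difference error: the gap between the sampled target and the current estimate.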

The Epsilon-Greedy Strategy

The epsilon-greedy strategy is a commonly used exploration strategy in reinforcement learning. It involves selecting the action with the highest Q-value most of the time (exploitation) and occasionally selecting a random action with a small probability ε (exploration).
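Putting these pieces together, a minimal tabular Q-learning sketch with an epsilon-greedy behavior policy might look like the following. The six-state chain task, the `step` function, and the hyperparameters (alpha = 0.1, gamma = 0.9, epsilon = 0.1, 500 episodes) are hypothetical choices for illustration, not tuned values.

```python
import random

N_STATES = 6      # hypothetical chain: states 0..5, state 5 is the goal
ACTIONS = [0, 1]  # 0 = move left, 1 = move right

def step(state, action):
    """Deterministic chain: reaching the goal gives +1 and ends the episode."""
    if action == 1:
        next_state = min(state + 1, N_STATES - 1)
    else:
        next_state = max(state - 1, 0)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def epsilon_greedy(q, state, epsilon, rng):
    """With probability epsilon explore; otherwise pick a highest-valued action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state])
    # Break ties randomly so an all-zero table does not always pick action 0.
    return rng.choice([a for a in ACTIONS if q[state][a] == best])

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0, max_steps=100):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = epsilon_greedy(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Target from the Bellman optimality equation; no future value after the goal.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q = q_learning()
greedy_policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(greedy_policy)  # learned action for each non-goal state (1 = move right)
```

Because the update target uses the maximum over next-state Q-values rather than the action the epsilon-greedy policy actually takes next, Q-learning is off-policy: it learns the values of the greedy policy while still behaving exploratorily.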

Applications of Reinforcement Learning

Reinforcement learning has found applications in various domains, including:

Autonomous Driving

Reinforcement learning can be used to train self-driving cars to navigate complex road environments, make safe decisions, and optimize fuel efficiency. Agents learn to respond to different traffic scenarios and adapt their driving behavior based on real-time observations.

Robotics

Reinforcement learning enables robots to learn complex tasks and manipulate objects in real-world environments. Robots can learn to grasp objects, walk, and perform intricate movements through trial and error, reducing the need for explicit programming.

Game Playing

Reinforcement learning has demonstrated remarkable success in game playing. From classic board games to complex video games, agents powered by reinforcement learning algorithms have achieved superhuman performance. AlphaGo’s 2016 victory over Lee Sedol, one of the strongest Go players in the world, is a notable example.

Recommendation Systems

Reinforcement learning techniques can be employed in recommendation systems to personalize user experiences. By continuously learning from user feedback, agents can optimize recommendations and improve user satisfaction.

Healthcare

Reinforcement learning holds promise in healthcare applications, such as personalized treatment recommendation and optimization of medical interventions. Agents can learn from patient data and clinical guidelines to make informed decisions and improve patient outcomes.

Challenges and Limitations of Reinforcement Learning

Despite its successes, reinforcement learning faces several challenges and limitations:

The Exploration-Exploitation Trade-Off

Finding the right balance between exploration and exploitation remains a challenge. Agents must explore sufficiently to discover optimal policies while exploiting existing knowledge to maximize rewards. Inadequate exploration can lead to suboptimal solutions.

Sparse Rewards

Reinforcement learning often struggles with sparse rewards, where the agent receives feedback only occasionally or when specific conditions are met. Sparse rewards make learning more challenging as agents have limited information to guide their decision-making.

Safety and Ethics

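Reinforcement learning algorithms must account for safety and ethical considerations. Because an agent optimizes whatever reward it is given, a poorly specified reward can lead to unintended or harmful behavior, and trial-and-error exploration can itself be dangerous on physical systems. Agents should learn behaviors that adhere to societal norms, avoid harm to humans, and prevent undesirable outcomes.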

Sample Inefficiency

Reinforcement learning algorithms typically require a large number of interactions with the environment to learn effective policies. Sample inefficiency can hinder the applicability of reinforcement learning in real-world scenarios, where gathering data can be expensive or time-consuming.

Future Directions and Advances in Reinforcement Learning

Reinforcement learning continues to evolve, and several exciting directions and advances are shaping its future:

Deep Reinforcement Learning

Deep reinforcement learning combines reinforcement learning with deep neural networks, enabling agents to learn directly from raw sensory inputs. Deep RL has achieved remarkable successes, such as learning to play Atari video games directly from screen pixels and mastering challenging control tasks.

Transfer Learning

Transfer learning aims to leverage knowledge learned in one task or environment to improve performance in another related task or environment. By transferring learned policies or value functions, agents can accelerate learning in new scenarios.

Multi-Agent Reinforcement Learning

Multi-agent reinforcement learning explores how multiple agents can learn and interact in a shared environment. It has applications in scenarios where multiple agents must collaborate or compete, such as in multi-robot systems or economic simulations.

Meta-Learning

Meta-learning focuses on learning how to learn. By training across many related tasks, agents acquire learning strategies that let them adapt quickly to a new task or environment from little experience. Meta-learning aims to improve the efficiency and generalization of reinforcement learning algorithms.

Conclusion

Reinforcement learning is a fascinating field of study that offers powerful tools for training intelligent agents. By combining trial and error with rewards, agents can learn effective strategies for solving complex problems. With advancements in algorithms, computing power, and real-world applications, reinforcement learning continues to push the boundaries of what machines can achieve. Whether in autonomous driving, robotics, game playing, or healthcare, reinforcement learning holds immense potential for shaping the future of AI.