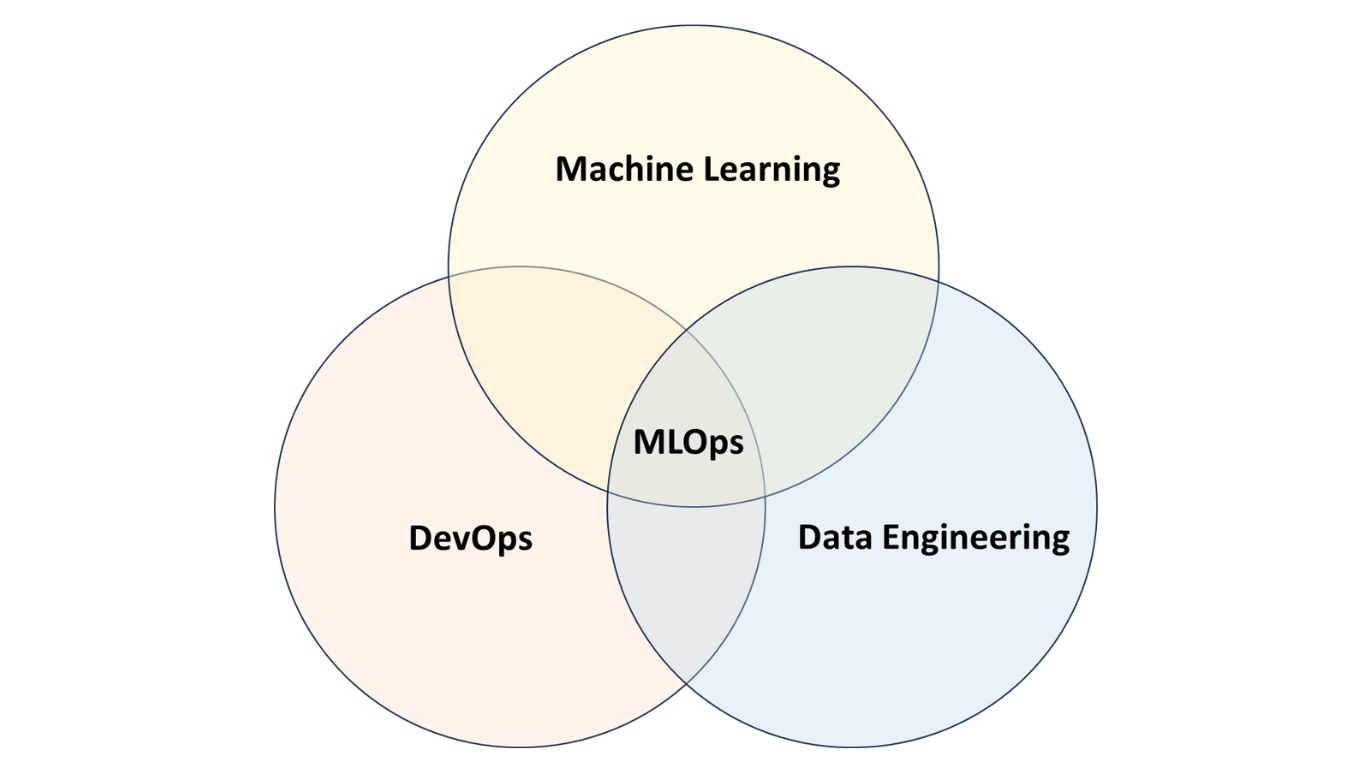

Machine Learning Operations, commonly known as MLOps, is a discipline within machine learning that aims to unify ML system development (Dev) with ML system operation (Ops). It involves collaboration between data scientists, ML engineers, and IT professionals to automate and optimize the end-to-end lifecycle of machine learning applications. In this blog post, we’ll delve into key aspects of MLOps: data management, version control, automation (pipelines), experiment tracking, CI/CD, monitoring and retraining, provisioning, and governance.

What is MLOps?

Data Management in MLOps

Effective data management is the backbone of successful MLOps implementation. It involves handling data with precision, ensuring its quality, integrity, and security. MLOps teams must establish robust data pipelines, implement data versioning, and address concerns related to data privacy and compliance.
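To make data versioning and quality checks a little more concrete, here is a minimal sketch using only the Python standard library. The function names `fingerprint` and `validate` and the sample records are assumptions made for illustration; real teams often rely on dedicated data versioning and validation tools instead.

```python
import hashlib
import json


def fingerprint(rows):
    """Return a SHA-256 hex digest that identifies this exact dataset content."""
    # Sorting keys makes the digest independent of key order within each record.
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def validate(rows, required_fields):
    """Return (row_index, field) pairs where a required field is missing or None."""
    problems = []
    for index, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                problems.append((index, field))
    return problems


# Hypothetical sample data: version 2 fills in a missing value from version 1.
rows_v1 = [{"id": 1, "age": 34}, {"id": 2, "age": None}]
rows_v2 = [{"id": 1, "age": 34}, {"id": 2, "age": 41}]

print(fingerprint(rows_v1)[:12], validate(rows_v1, ["id", "age"]))
print(fingerprint(rows_v2)[:12], validate(rows_v2, ["id", "age"]))
```

Any change to the data produces a different fingerprint, so the digest can be stored next to a trained model to record which data it saw.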

Version Control for Machine Learning Models

Version control is not just for code; it’s equally vital for ML models. MLOps leverages version control systems to track every change made to a model, so data scientists can collaborate on it and return to any earlier version. This ensures reproducibility and traceability, both crucial for maintaining a reliable ML lifecycle.
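As a rough illustration of model versioning, the sketch below keeps an in-memory registry that ties each model artifact to a content hash, a data version label, and its hyperparameters. `ModelRegistry` and `ModelVersion` are hypothetical names invented for this example, not the API of any particular tool.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    version: int
    artifact_sha256: str   # hash of the serialized model bytes
    data_version: str      # label of the dataset used for training
    params: tuple          # sorted (name, value) hyperparameter pairs


class ModelRegistry:
    """Append-only, in-memory list of model versions (illustrative only)."""

    def __init__(self):
        self._versions = []

    def register(self, artifact: bytes, data_version: str, params: dict) -> ModelVersion:
        entry = ModelVersion(
            version=len(self._versions) + 1,
            artifact_sha256=hashlib.sha256(artifact).hexdigest(),
            data_version=data_version,
            params=tuple(sorted(params.items())),
        )
        self._versions.append(entry)
        return entry

    def get(self, version: int) -> ModelVersion:
        # Versions are numbered from 1 in registration order.
        return self._versions[version - 1]

    def latest(self) -> ModelVersion:
        return self._versions[-1]


registry = ModelRegistry()
first = registry.register(b"weights-a", "data-v1", {"lr": 0.1})
second = registry.register(b"weights-b", "data-v2", {"lr": 0.05})
print(registry.latest().version, registry.get(1).data_version)
```

Because each entry records the artifact hash together with the data version and parameters, an older model can be traced back to the inputs that produced it.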

Automation (Pipeline) in MLOps

Automation, particularly in the form of pipelines, plays a pivotal role in MLOps. Automated pipelines streamline the end-to-end ML workflow, from data preprocessing to model deployment. This not only enhances efficiency but also reduces the chances of manual errors, ensuring consistency in model deployment.
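A pipeline can be pictured as an ordered list of steps where each step's output feeds the next one. The sketch below is deliberately tiny: the "model" is just a mean, and `run_pipeline`, `preprocess`, `train`, and `deploy` are placeholder names assumed for this example rather than part of any orchestration framework.

```python
def run_pipeline(steps, data):
    """Run (name, function) steps in order, passing each output to the next step."""
    completed = []
    for name, step in steps:
        data = step(data)
        completed.append(name)
    return data, completed


def preprocess(values):
    # Drop missing values before training.
    return [v for v in values if v is not None]


def train(values):
    # Stand-in for real training: the "model" is just the mean of the data.
    if not values:
        raise ValueError("no training data left after preprocessing")
    return {"mean": sum(values) / len(values)}


def deploy(model):
    # Stand-in for pushing the model to a serving environment.
    return {"status": "deployed", "model": model}


steps = [("preprocess", preprocess), ("train", train), ("deploy", deploy)]
result, completed = run_pipeline(steps, [1.0, None, 3.0])
print(result, completed)
```

Encoding the workflow as data (the `steps` list) is what makes it repeatable: the same sequence runs the same way every time, with no manual hand-offs in between.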

Experiment Tracking in MLOps

Keeping track of experiments is essential for understanding the performance of various models and their iterations. MLOps incorporates experiment tracking tools to record and analyze the outcomes of different experiments, aiding in the selection of the most effective model for deployment.
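The idea behind experiment tracking can be sketched in a few lines: record the parameters and metrics of every run, then query for the best one. `ExperimentTracker` and `Run` are hypothetical names for this example, and the metric values are made up; dedicated tracking tools add persistence, artifacts, and dashboards on top of the same idea.

```python
from dataclasses import dataclass


@dataclass
class Run:
    name: str
    params: dict
    metrics: dict


class ExperimentTracker:
    """Keeps runs in memory and picks the best one by a chosen metric."""

    def __init__(self):
        self.runs = []

    def log_run(self, name, params, metrics):
        run = Run(name=name, params=dict(params), metrics=dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric, higher_is_better=True):
        if not self.runs:
            raise ValueError("no runs have been logged")
        pick = max if higher_is_better else min
        return pick(self.runs, key=lambda run: run.metrics[metric])


tracker = ExperimentTracker()
# Illustrative numbers only, not real results.
tracker.log_run("baseline", {"lr": 0.1}, {"accuracy": 0.81, "loss": 0.52})
tracker.log_run("lower-lr", {"lr": 0.01}, {"accuracy": 0.87, "loss": 0.40})
tracker.log_run("tiny-lr", {"lr": 0.001}, {"accuracy": 0.84, "loss": 0.45})

print(tracker.best("accuracy").name)
print(tracker.best("loss", higher_is_better=False).name)
```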

CI/CD Practices in MLOps

Continuous Integration and Continuous Deployment (CI/CD) practices, borrowed from software development, are integral to MLOps. CI/CD ensures that changes to ML models are automatically tested and deployed, reducing the time from development to production and enhancing the agility of the MLOps workflow.
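One common way to apply CI/CD to models is an automated quality gate that a candidate model must pass before it is promoted. The sketch below assumes a simple accuracy check; the thresholds `min_acc` and `max_regression` and the function names are illustrative choices, not a standard.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def passes_gate(candidate_acc, production_acc, min_acc=0.8, max_regression=0.01):
    """Allow deployment only if the candidate is good enough in absolute terms
    and does not fall noticeably below the model currently in production."""
    meets_floor = candidate_acc >= min_acc
    no_regression = candidate_acc >= production_acc - max_regression
    return meets_floor and no_regression


# A CI job could run a check like this and fail the build when it returns False.
candidate = accuracy([1, 0, 1, 1], [1, 0, 0, 1])
print(candidate, passes_gate(candidate, production_acc=0.70))
```

In this usage example the candidate beats the production model but still fails the gate because it is below the absolute floor, which is the kind of mistake an automated check catches before deployment.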

Monitoring and Retraining in MLOps

Once a model is in production, MLOps emphasizes continuous monitoring. This involves tracking the model’s performance in real-world scenarios and retraining it when necessary to adapt to changing data patterns. Monitoring and retraining are crucial for maintaining model accuracy over time.
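A very simple way to detect changing data patterns is to compare live feature values against the training baseline. The sketch below measures how far the live mean has moved, in units of the baseline standard deviation, and flags retraining past a threshold. The threshold of 2.0 and the names `drift_score` and `should_retrain` are assumptions for illustration; production systems typically use richer statistical tests and also track prediction quality.

```python
import statistics


def drift_score(baseline, live):
    """Absolute shift of the live mean from the baseline mean,
    measured in baseline standard deviations."""
    mu = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has zero variance; score is undefined")
    return abs(statistics.fmean(live) - mu) / sigma


def should_retrain(baseline, live, threshold=2.0):
    """Flag retraining when the live data has drifted past the threshold."""
    return drift_score(baseline, live) > threshold


# Hypothetical feature values seen at training time and in production.
baseline = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
live_stable = [10, 11, 10, 9]
live_shifted = [15, 16, 14, 15]

print(should_retrain(baseline, live_stable))
print(should_retrain(baseline, live_shifted))
```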

Provisioning Resources for MLOps

Provisioning resources involves ensuring that the necessary infrastructure is available for ML workflows. MLOps teams need to efficiently allocate computing resources, manage dependencies, and scale infrastructure based on the demands of ML projects. Cloud services are often leveraged for flexible resource provisioning.
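Scaling infrastructure based on demand often comes down to a small calculation like the one sketched here: how many serving replicas are needed for the current load, kept within fixed bounds. The function name, the requests-per-second units, and the default limits are assumptions made for this example; cloud autoscalers implement their own, more elaborate policies.

```python
import math


def desired_replicas(load_rps, capacity_rps_per_replica, min_replicas=1, max_replicas=10):
    """Number of replicas needed to serve the load, clamped to [min, max]."""
    if capacity_rps_per_replica <= 0:
        raise ValueError("capacity per replica must be positive")
    if load_rps < 0:
        raise ValueError("load cannot be negative")
    needed = math.ceil(load_rps / capacity_rps_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical load levels for a model that handles 50 requests/second per replica.
for load in (0, 120, 10_000):
    print(load, desired_replicas(load, capacity_rps_per_replica=50))
```

The lower bound keeps the service available when traffic is idle, while the upper bound caps cost during spikes.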

Governance in MLOps

Governance in MLOps focuses on establishing policies and controls to ensure that ML workflows align with organizational goals and comply with regulations. This includes managing access to data, defining model deployment procedures, and implementing measures to address ethical considerations in AI.
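Access policies and auditability can be illustrated with a small role-based check that records every decision. The roles, actions, and the `POLICY` table below are invented for this sketch; a real organization would define its own and enforce them through its identity and access management systems.

```python
# Hypothetical policy: which actions each role may perform.
POLICY = {
    "data_scientist": {"read_data", "train_model"},
    "ml_engineer": {"read_data", "train_model", "deploy_model"},
    "auditor": {"read_audit_log"},
}


def is_allowed(role, action, policy=POLICY):
    """Return True if the role is permitted to perform the action."""
    return action in policy.get(role, set())


def authorize(user, role, action, audit_log):
    """Check the policy and append the decision to the audit log."""
    allowed = is_allowed(role, action)
    audit_log.append({"user": user, "role": role, "action": action, "allowed": allowed})
    return allowed


audit_log = []
print(authorize("alice", "data_scientist", "deploy_model", audit_log))
print(authorize("bob", "ml_engineer", "deploy_model", audit_log))
print(len(audit_log))
```

Logging denied requests as well as granted ones gives reviewers a complete trail of who attempted which action.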

Exploring the Benefits

Enhanced Collaboration Between Teams

MLOps bridges the gap between data science and IT operations, fostering seamless collaboration. By breaking down silos, MLOps ensures that data scientists, developers, and operations teams work in tandem, contributing to a more efficient and cohesive workflow.

Improved Model Performance and Reliability

Implementing MLOps practices results in enhanced model performance and reliability. Through systematic testing, continuous monitoring, and feedback loops, organizations can proactively address issues, ensuring that ML models deliver accurate and reliable predictions in real-world scenarios.

Accelerated Time to Market

Speed is of the essence in today’s competitive landscape. MLOps streamlines the deployment of machine learning models, significantly reducing the time it takes to bring innovative solutions to market. This agility is particularly crucial in industries where staying ahead of the competition is paramount.

Efficient Resource Utilization

MLOps enables organizations to efficiently allocate and manage computing resources. With the ability to scale infrastructure based on project demands, businesses can optimize resource usage, ensuring cost-effectiveness and flexibility in handling varying workloads.

Continuous Integration and Continuous Deployment (CI/CD)

Adopting CI/CD practices from software development automates the testing and deployment of machine learning models. This not only reduces the risk of errors but also makes deployments faster and more reliable, contributing to a more agile development cycle.

Adaptive Monitoring and Retraining

MLOps emphasizes continuous monitoring of deployed models in real-world environments. This adaptive monitoring allows organizations to detect performance deviations and retrain models promptly. By staying attuned to changing data patterns, MLOps ensures sustained accuracy over time.

Enhanced Scalability for Large-Scale Projects

MLOps practices are designed to scale, making them suitable for large-scale machine learning projects. By implementing robust MLOps pipelines, organizations can handle the complexity and volume of data associated with extensive ML initiatives without compromising efficiency.

Strengthened Security and Compliance Measures

As machine learning models become integral to critical business processes, ensuring security and compliance is paramount. MLOps addresses these concerns by incorporating measures to protect sensitive data, manage access controls, and adhere to regulatory requirements, instilling confidence in stakeholders.

Streamlined Experimentation and Innovation

Experimentation is a key aspect of machine learning development. MLOps facilitates streamlined experiment tracking, allowing organizations to conduct experiments efficiently, analyze results, and select the most effective models for deployment. This streamlined approach encourages continuous innovation.

Future-Proofing Through Comprehensive Governance

MLOps establishes governance frameworks that define policies and controls, aligning ML workflows with organizational goals. This not only ensures compliance with regulations but also future-proofs ML initiatives by addressing ethical considerations and mitigating risks associated with data and model governance.

Conclusion

Mastering MLOps involves navigating through various elements, each crucial for the success of machine learning initiatives. From meticulous data management to implementing robust version control, automation, and governance practices, MLOps is a holistic approach that ensures the reliability, scalability, and efficiency of ML workflows.

In the ever-evolving landscape of AI and machine learning, embracing MLOps is not just a best practice; it’s a necessity for organizations aiming to derive maximum value from their ML investments.

Now, armed with insights into data management, version control, automation, and more, you’re better equipped to embark on your MLOps journey. Implementing these practices will not only streamline your ML workflows but also position your organization at the forefront of innovation in the rapidly advancing field of machine learning.